Digital Receipt #3 Ming Hin Cheung

Tuesdays 5:00-6:15pm | North Academic Center Library 1/301Y [email protected] | (646) 801-1462

After reading the excerpt from Safiya Noble’s Algorithms of Oppression, I came to a deeper understanding of the “numerical” influences of social grouping and their powerful impact on the things we see, believe and, most importantly, follow. On a general scale, the digital decision-making that society calls fair and far from race- or sex-driven is, and will always be, created by men and women who are themselves the very thing they are attempting not to create. So to believe that they can create this vacuum of ideal prediction without any prejudgments is false. These codes, and the underlying ethics of technology, come from minds that are asked to predict the future. The only way to make a proper hypothesis is to study the past and present and make an educated guess about what the future will be. If this is in fact true, you cannot just blur out the information about all the “others” of civilization, no matter the time period.

In the excerpt there was a part that stated, “At the very least, we must ask when we find these kinds of results, Is this the best information? For Whom?” This short line captures all that algorithms represent. To ask a program to take into consideration all people from near and far before making its decision is impossible. So ultimately, these outcomes will not only be made for a specific group but also by a specific group, adding to their inaccuracy for all types of individuals within the huge spectrum of men and women, which itself has blurred lines of identification.

In the book “Algorithms of Oppression”, the author, Safiya Umoja Noble, investigates different oppressed groups in America and relates their experience to the internet and search algorithms. Her argument focuses on algorithms that are supposed to be objective and free from human bias. She claims that many large companies that control the algorithms for search engines employ workers and engineers who bring biases such as sexism and racism into their designs. She argues that this unfairly affects minorities and leaves them with more challenges.

This is tough for me to respond to. I am a Caucasian male and will admit I have yet to face any of the challenges listed within the pages of Safiya Noble’s book. That doesn’t mean I haven’t faced challenges in my life; they are just different from those others face.

It is unfortunate that some use their power to control the narrative. In this case, Google engineers working on software algorithms are embedding their personal bias into the code. I don’t believe this defines Google as a whole; there are always bad apples in a group. Oppressive issues shouldn’t be ignored, and people need to be held accountable for their actions. At the same time, those people shouldn’t define the entire group, which often happens.

I enjoyed reading excerpts from Safiya Noble’s book and feel I gained valuable perspective on what minorities deal with daily. The examples she gave, such as Google search results, were enlightening, and I agree that these algorithms should be neutral and objective. I believe the author has ultimately brought light to a situation that needed attention, and it has made and will continue to make a difference. Her goal was to open a dialogue on the topic, and she undoubtedly accomplished that, because we can talk about it on our blog! Looking forward to reading other responses!

In the show Star Trek, they were debating whether Data, an android, can be seen as a person with the same rights as a human. At the beginning of the argument, I believed that androids don’t have any rights, but the person defending Data changed my mind. He convinced me that Data can have rights because he is intelligent, self-aware, and has consciousness. He proved to me that all these traits can be seen in Data, and he made another statement as well: “Humans give birth to other humans that also have rights.” Why can’t androids, which are also created by humans, have the same rights? These are two great points made in the show, but I still believe that, even though AIs are great and all, they have the power to take over from humans because they are able to think many steps ahead of us and learn faster than we do. On the other hand, the excerpt from the book tells us that the internet isn’t a safe place because of how it was designed and programmed with algorithms that promote “sexist and racist attitudes openly at work and beyond.”

-Tanvir Youhana

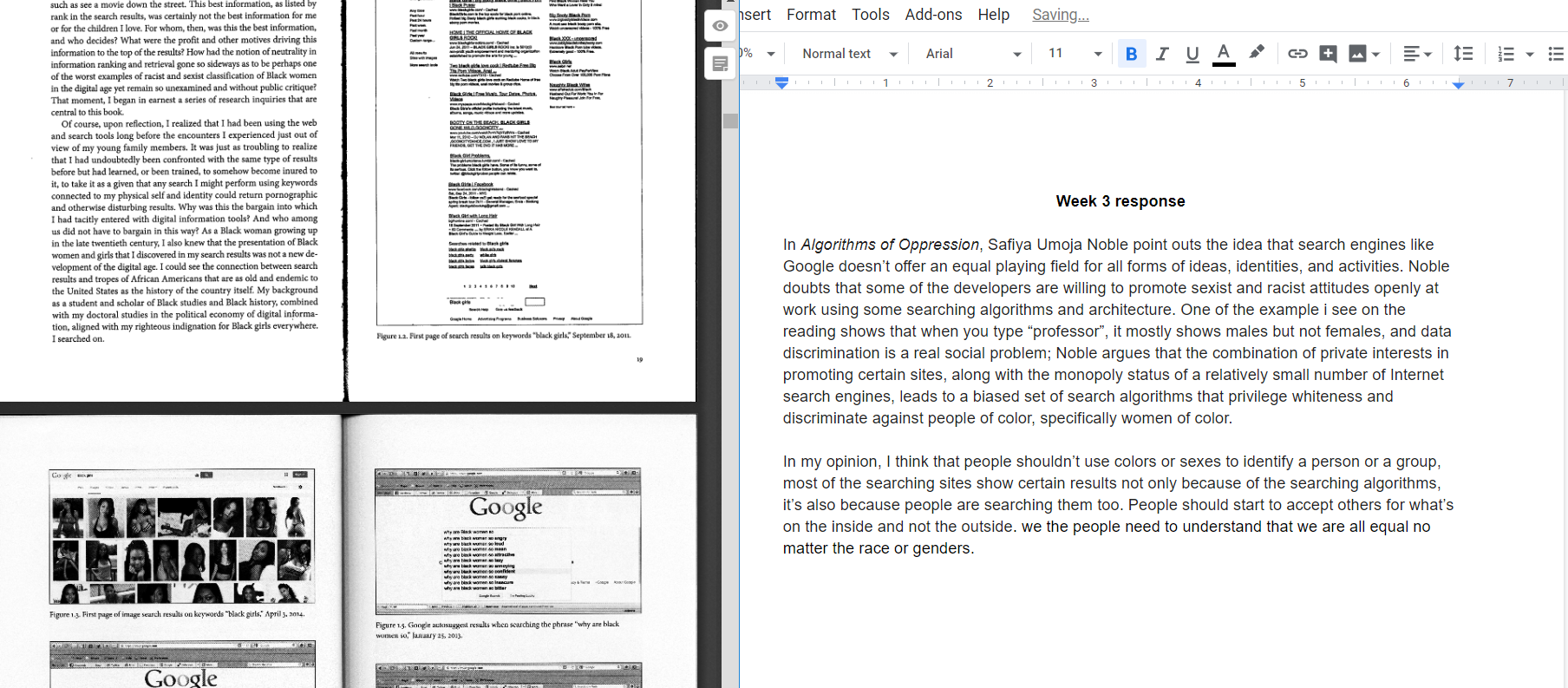

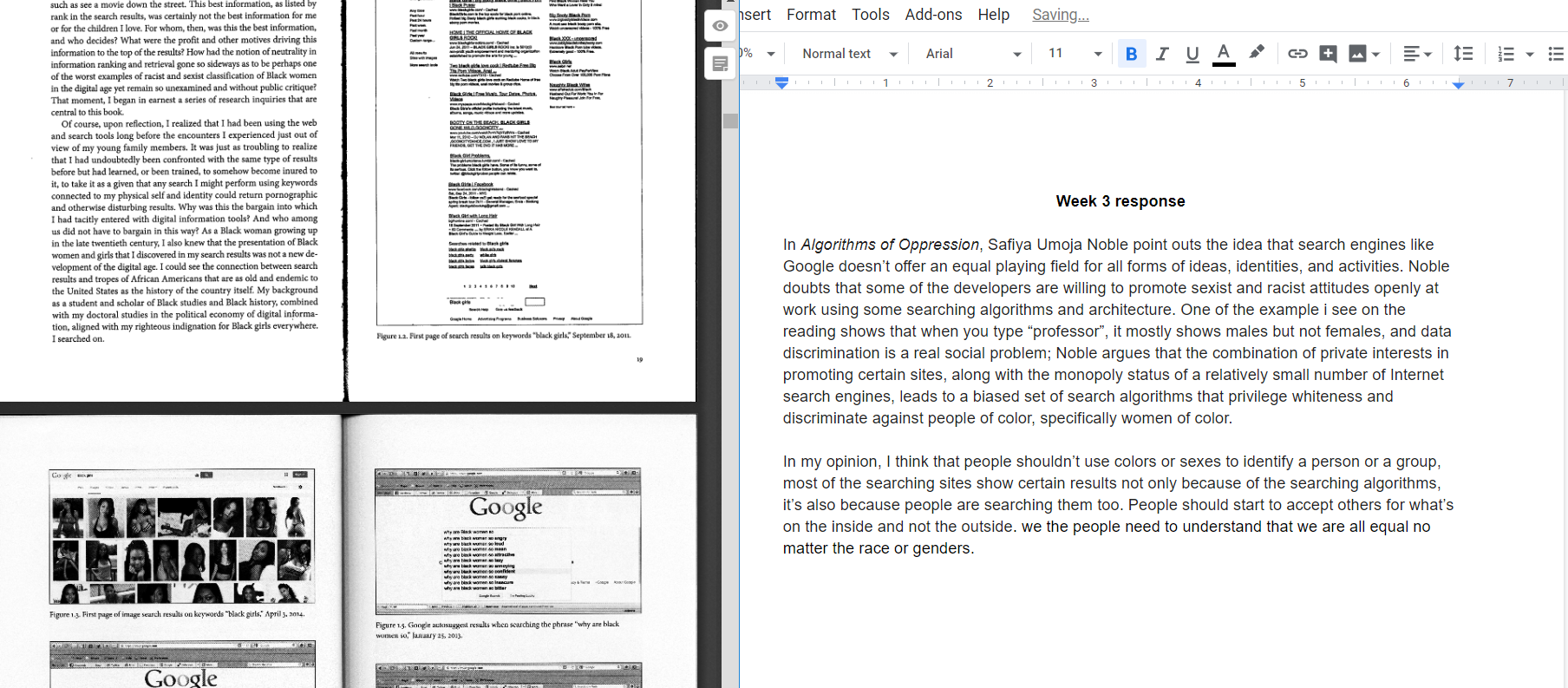

“SAFIYA NOBLE – Algorithms of Oppression” mentions that, in the world of algorithms and architectures, the most successful people openly bring negative sexist and racist attitudes to their work. And they think that race together with sex will give more expression of the race. The article shows a picture of people googling the phrase “Black girls”, and the result is just what I expected. The image shows that the top five Google results for “Black girls” are all sex and porn. A few other images highlight the idea that color affects the attitude toward a gender. For example, the word “Black” carries a negative connotation and the word “white” a positive one: searching “why are white/black woman so” pops up different connotations and attitudes toward each group of people. In my opinion, people should not view color as a difference or judge how bad a group is by it. If we accept the ideas that the old society puts on people, the world will never improve and will grow into war and violence, just like how Hitler changed Germany’s attitude toward Jewish people. Imagine yourself as the one to whom all the negative connotations are applied; what would you think? When people think about an issue, we often focus only on whether we will be the ones who benefit from it. But do people ask who will be hurt by the outcome? We should see things from others’ perspective rather than being selfish and turning into the “Hitler” we consider bad; people often don’t realize that when we spread such words we are doing what Hitler did. So be mindful of our words and thoughts.

The show starts off with the crew members playing cards.

Reading facial expressions is key to card games.

I wondered what the episode was about from just the title, and I guessed that it was about testing what man can accomplish.

Took a break and read the excerpt about algorithms in search engines such as Google.

I believe that one day the computers will take over the world and bring humanity to extinction.

The Data guy creeps me out with his artificial tone, sounding like someone without any emotion in his voice.

AIs learn from experience, not from books, according to what Data stated.

I believe that AIs will never have true emotions like us humans.

Rights to AIs?

Data is seen as property of the fleet and cannot resign, because he is a robot, not a human, and doesn’t have any rights.

The attachment that some of the Starfleet officers have to the AI is blinding them to the fact that AIs are not humans and are unpredictable.

Data is a machine made by humans, so it doesn’t have the rights that a human does.

The person defending Data makes a good point: humans are made by other humans, and we can’t just say that those humans have no rights.

It seems that Data has found love as an AI, which surprises me and makes me rethink my first train of thought about AIs.

A person can be seen as someone who has the traits of intelligence, self-awareness, and consciousness.

What I have read from the excerpt showed me that we are feeding racism into the minds of the young through the internet. The internet has become a place where there is no filter on anything. Also, it is not right that people making programs for search engines are able to feed sexist and racist attitudes into them.

-Tanvir Youhana

Reading Awayy!!!

Digital Receipt

Digital Receipt #3

Sambeg Raj Subedi

ENGL 21007-S

Prof. Jesse Rice-Evans

Weekly Assign#3

02/18/2019

The Measure of a Man, Star Trek, Episode 9, was interesting to watch, as it mainly focuses on an issue related to humans and technology. Data, a unique character in this episode, is a machine that looks completely human and is constructed in such a way that it is capable of learning, responding and making decisions. These capabilities drew the attention of Maddox and his friend Riker. They aimed to disassemble Data and study him in order to build more such machines. The episode revolves around a central question, whether machines should be free to exercise rights of their own, and it ends by granting them, allowing Data to refuse the proposal to disassemble and experiment on him.

Though in this episode a machine was given the full freedom of its rights, I don’t believe that in the real world we should allow machines to make decisions of their own. These machines are operated by software and algorithms, which are developed by humans. So it’s not necessarily true that an operation performed by a machine will be 100% correct and reliable. For example, a newspaper article published in 2016 mentioned that PredPol, algorithm-based software used to predict when and where crimes would take place, was found to be intentionally targeting Black communities. This shows how software, algorithms and AI can be sexist and racist. AI systems have now conquered almost every place. Developers use algorithms in software to silently extract personal data and information from users and feed it into AI systems for their own interest. For example, we might sometimes wonder how Facebook is able to display ads that match our interests, such as the shoe, phone and bag brands we like most. It’s all because of an AI system that is able to read our minds, and displaying such ads unknowingly leads users to buy those products.