Week 3

*Algorithms of Oppression* by Safiya Noble is about how algorithms and technology have promoted racism and sexism. To support her argument, the author discussed problems with Google’s search engine. I found this book excerpt really interesting because I had never considered Google’s search engine to be racist or problematic. One example of the problem with Google’s algorithms was that African Americans were being tagged as apes or animals. Another example was that searching the word N*gger on Google Maps returned the White House. The author also discussed whether the information on Google is reliable. Often, when searching for something on Google, a paid advertisement appears as the top result. I agree that this is a problem because most people click on the first link, believing it is the most popular and trusted result, when it is actually a paid ad.

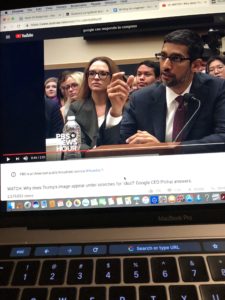

To learn more about Google’s search engine, I watched a YouTube video of Google’s CEO, Sundar Pichai, responding to Congress. One congresswoman asked why a picture of Donald Trump comes up when searching the word “idiot” in Google Images. Pichai said that the search engine matches the keyword against millions of online pages. Google determines which pictures best match the keyword based on relevance, popularity, and how other users online are using the word. Pichai also said that no one manually chooses pictures to match with keywords. I believe the problem is not only Google’s algorithms but also the people who use the internet. Google handles about 3.5 billion searches a day, so it would be difficult to monitor them for racist or sexist results. It would also be hard to program the search engine to distinguish what is racist from what is not, since it is not human.